Nagios can be configured to send email alerts on the state of a monitored host or service. This guide takes you through how to configure Nagios email notification using Gmail.

The current state of a monitored service or host is determined by its status, which can be OK, WARNING, UP, DOWN, etc., and by its state type, which can be hard or soft.

Read more about notifications in the Nagios notification documentation.

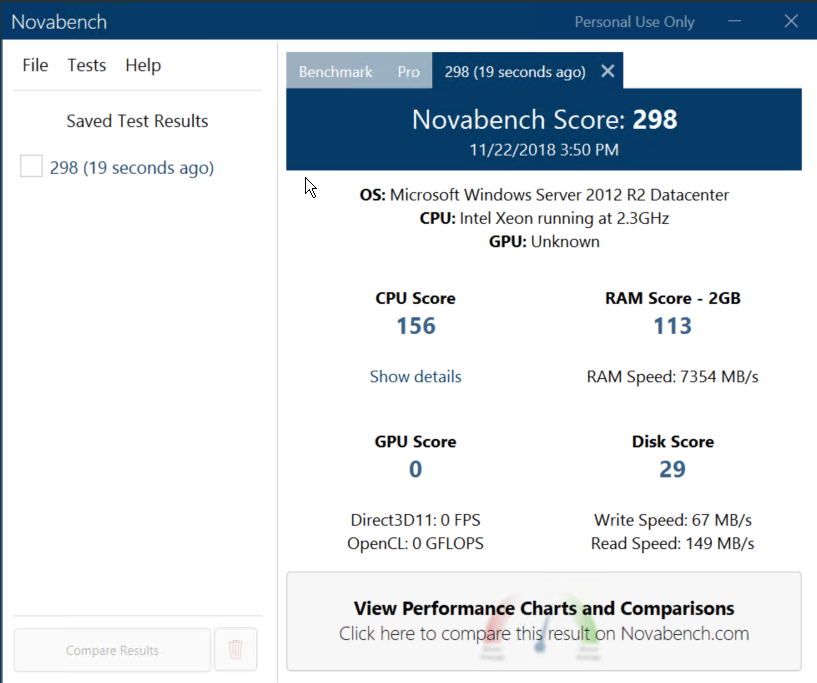

Before you can proceed, install Nagios and add hosts to be monitored.

Configure Nagios Email Notification Using Gmail

Install Required Mail Packages

In this guide, we are going to use Postfix as our Mail Transfer Agent (MTA). By default, Nagios sends mail notifications using the mail command. Run the command below to install the required packages.

yum install postfix cyrus-sasl-plain mailx -y

Configure Postfix to Use Gmail Relay

Enable STARTTLS encryption by changing the line smtp_tls_security_level = may to smtp_tls_security_level = encrypt.

sed -i 's/smtp_tls_security_level = may/smtp_tls_security_level = encrypt/' /etc/postfix/main.cf

If the smtp_tls_security_level option is not set at all, append it instead:

echo "smtp_tls_security_level = encrypt" >> /etc/postfix/main.cf
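Running the sed and echo commands above more than once can leave a stale value or duplicate entries in main.cf. The following is a minimal sketch of an idempotent alternative. Note that set_postfix_option is a hypothetical helper name, not part of Postfix. Where it is available, Postfix's own postconf -e command is usually the simpler choice.

```shell
# set_postfix_option FILE KEY VALUE
# Hypothetical helper (not part of Postfix): replace an existing
# "KEY = ..." line in FILE, or append one if KEY is not set yet.
# Assumes KEY and VALUE contain no "|" or "&" characters.
set_postfix_option() {
    file=$1
    key=$2
    value=$3
    if grep -Eq "^${key}[[:space:]]*=" "$file"; then
        tmp=$(mktemp) || return 1
        # Write to a temp file, then copy back so FILE keeps its owner and mode
        sed -E "s|^${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$tmp" &&
            cat "$tmp" > "$file"
        status=$?
        rm -f "$tmp"
        return "$status"
    fi
    # KEY was not present: append it
    printf '%s = %s\n' "$key" "$value" >> "$file"
}

# Example (as root):
#   set_postfix_option /etc/postfix/main.cf smtp_tls_security_level encrypt
```

Calling the helper twice with the same arguments leaves a single entry in the file, which is the main reason to prefer it over a plain append.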

Define the path to CA certificates. The public root certificates are usually found under /etc/pki/tls/certs/ca-bundle.crt on RHEL derivatives and /etc/ssl/certs/ca-certificates.crt on Debian/Ubuntu systems.

echo "smtp_tls_CAfile = /etc/pki/tls/certs/ca-bundle.crt" >> /etc/postfix/main.cf
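Since the CA bundle location differs between distributions, the path can be picked at run time rather than hard-coded. This is a small sketch under that assumption; first_existing_file is a hypothetical helper name, and the two candidate paths are the ones mentioned above.

```shell
# first_existing_file PATH...
# Hypothetical helper: prints the first argument that is an existing
# regular file and returns 0; returns 1 if none of them exist.
first_existing_file() {
    for candidate in "$@"; do
        if [ -f "$candidate" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    return 1
}

# Example (as root):
#   ca_file=$(first_existing_file \
#       /etc/pki/tls/certs/ca-bundle.crt \
#       /etc/ssl/certs/ca-certificates.crt) &&
#       echo "smtp_tls_CAfile = $ca_file" >> /etc/postfix/main.cf
```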

Next, insert the following lines to the Postfix configuration file to define the Gmail relay host and SASL options.

cat >> /etc/postfix/main.cf << EOF

relayhost = [smtp.gmail.com]:587

smtp_sasl_auth_enable = yes

smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd

smtp_sasl_security_options = noanonymous

EOF

Configure SASL credentials for your Gmail account.

vim /etc/postfix/sasl_passwd

Enter the following content, replacing the userid and password accordingly.

[smtp.gmail.com]:587 userid@gmail.com:password

Generate Postfix lookup table from the /etc/postfix/sasl_passwd file.

postmap /etc/postfix/sasl_passwd

Change the ownership of /etc/postfix/sasl_passwd and its lookup table to root, and restrict their permissions to read and write for the owner only.

chown root:root /etc/postfix/sasl_passwd*

chmod 600 /etc/postfix/sasl_passwd*
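Because the credentials file holds a plain-text password, it can help to create it with restrictive permissions from the start instead of tightening them afterwards. The sketch below assumes that approach; write_sasl_passwd is a hypothetical helper name, and the lookup table still has to be generated with postmap as shown earlier.

```shell
# write_sasl_passwd FILE RELAY USER PASSWORD
# Hypothetical helper: writes a single relay credential entry to FILE,
# readable and writable by the owner only.
# Caution: a password passed as an argument may end up in shell history.
write_sasl_passwd() {
    file=$1
    relay=$2
    user=$3
    password=$4
    (
        umask 077   # new files get mode 600 inside this subshell only
        printf '%s %s:%s\n' "$relay" "$user" "$password" > "$file"
    ) || return 1
    chmod 600 "$file"   # also tighten the mode if FILE already existed
}

# Example (as root), followed by the postmap step from the guide:
#   write_sasl_passwd /etc/postfix/sasl_passwd '[smtp.gmail.com]:587' \
#       userid@gmail.com 'password'
#   postmap /etc/postfix/sasl_passwd
```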

Start and enable Postfix

systemctl enable postfix --now

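Test the relay.

First, allow Postfix to authenticate to your Gmail account. Google has retired the "less secure apps" setting for most accounts, so you will generally need to enable 2-Step Verification, generate an app password, and use it as the password in /etc/postfix/sasl_passwd.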

After that, try to send a test mail.

echo "Test Postfix Gmail Relay" | mail -s "Postfix Gmail Relay" userid@gmail.com

You should receive the mail in your inbox. You can also check the mail logs; the log filename may differ on your system.

tail -f /var/log/maillog

Jan 19 15:01:44 dev-server postfix/smtp[5109]: C7E8C3B5AD: to=userid@gmail.com,relay=smtp.gmail.com[74.125.200.109]:587, delay=18, delays=0.04/0.02/16/2.1,dsn=2.0.0, status=sent (250 2.0.0 OK 1571511704 h8sm11800598pfo.64 - gsmtp)

Jan 19 15:01:44 dev-server postfix/qmgr[4574]: C7E8C3B5AD: removed
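Rather than reading the log by eye, the delivery result can be pulled out of the status= field shown in the sample above. This is a rough sketch that assumes the log format matches that sample; last_delivery_status is a hypothetical helper name.

```shell
# last_delivery_status LOGFILE RECIPIENT
# Hypothetical helper: prints the status= value (for example sent,
# deferred or bounced) from the most recent log line mentioning
# RECIPIENT. Prints nothing if no such line exists.
last_delivery_status() {
    grep -F "$2" "$1" |
        sed -n 's/.*status=\([a-z]*\).*/\1/p' |
        tail -n 1
}

# Example:
#   last_delivery_status /var/log/maillog userid@gmail.com
```

A value other than sent usually means the reason is spelled out in parentheses at the end of the same log line.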

Create Nagios Contact Object Definition

The first step is to create a Nagios contact group that defines who should be notified about the state of a monitored service or host.

Nagios ships with a default contacts definition file, contacts.cfg, located in the default object definitions directory, /usr/local/nagios/etc/objects.

You can modify the default contacts definition configuration file or create your own.
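As an illustration of what such a definition might look like, the sketch below prints a contact and a contact group. It assumes the generic-contact template from the sample templates.cfg is in use. The helper name print_nagios_contact and the contact and group names in the example are hypothetical, and hosts or services still need to reference the group through contact_groups before it receives any alerts.

```shell
# print_nagios_contact NAME ALIAS EMAIL GROUP
# Hypothetical helper: prints a contact definition and a contact group
# containing it. GROUP must not duplicate an existing contact group.
print_nagios_contact() {
    cat << EOF
define contact {
    contact_name    $1
    use             generic-contact
    alias           $2
    email           $3
}

define contactgroup {
    contactgroup_name    $4
    alias                $2 group
    members              $1
}
EOF
}

# Example (as root; all names are placeholders):
#   print_nagios_contact oncall "On-call Admin" userid@gmail.com oncall-admins \
#       >> /usr/local/nagios/etc/objects/contacts.cfg
```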

Verify Nagios Configuration file

/usr/local/nagios/bin/nagios -v /usr/local/nagios/etc/nagios.cfg

If the check reports no errors, restart the Nagios service.

systemctl restart nagios
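The two commands above can be chained so that a restart only happens when the configuration check passes. This is a minimal sketch; restart_nagios_if_valid and the NAGIOS_BIN and NAGIOS_CFG variables are hypothetical names, with defaults taken from the paths used in this guide.

```shell
# Paths follow the guide; override the variables for other install layouts.
NAGIOS_BIN=${NAGIOS_BIN:-/usr/local/nagios/bin/nagios}
NAGIOS_CFG=${NAGIOS_CFG:-/usr/local/nagios/etc/nagios.cfg}

# restart_nagios_if_valid
# Hypothetical helper: restarts Nagios only if the config check succeeds.
restart_nagios_if_valid() {
    if "$NAGIOS_BIN" -v "$NAGIOS_CFG" > /dev/null; then
        systemctl restart nagios
    else
        echo "Nagios configuration check failed; not restarting" >&2
        return 1
    fi
}

# Example (as root):
#   restart_nagios_if_valid
```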

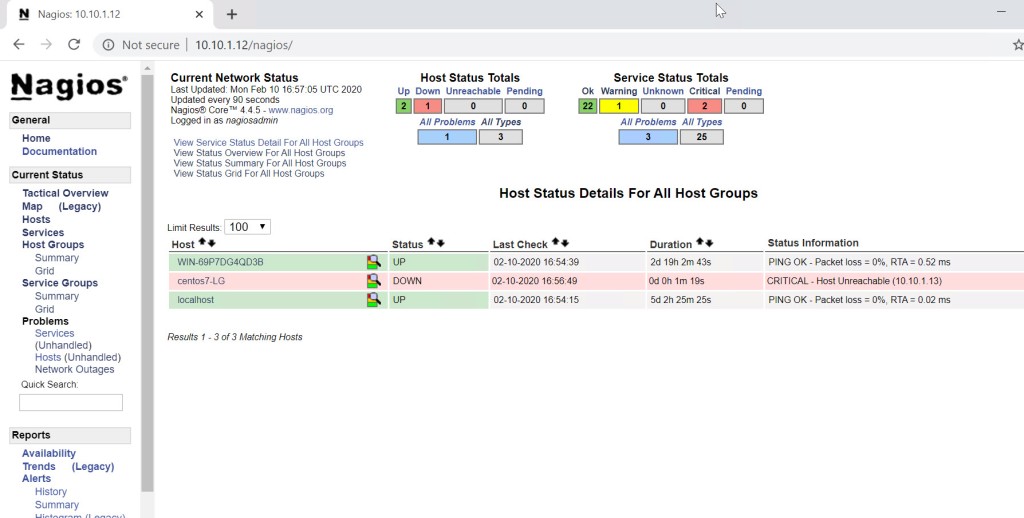

Testing Nagios Mail Alerts Notification

- To test if mail notification works, first change the IP address of one of the hosts to an IP that is unreachable such that it looks like the host is down.

- Reschedule the next check for the host state. This will automatically send out an email alert on host DOWN.
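Besides the web interface, a check can also be requested by writing an external command to the Nagios command file. The sketch below builds a SCHEDULE_FORCED_HOST_CHECK command for that purpose. It assumes external commands are enabled and that the command file lives under the install prefix used in this guide; forced_host_check_command is a hypothetical helper name and web01 is a placeholder host.

```shell
# forced_host_check_command HOST
# Hypothetical helper: prints a SCHEDULE_FORCED_HOST_CHECK external
# command asking Nagios to check HOST right away.
# Format: [time] COMMAND;host_name;check_time
forced_host_check_command() {
    now=$(date +%s)
    printf '[%s] SCHEDULE_FORCED_HOST_CHECK;%s;%s\n' "$now" "$1" "$now"
}

# Example (run as a user allowed to write to the command file):
#   forced_host_check_command web01 > /usr/local/nagios/var/rw/nagios.cmd
```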

If you encounter the error below when rescheduling checks,

Error: Could not open command file '/usr/local/nagios/var/rw/nagios.cmd' for update!

it is likely caused by SELinux. To fix it, run journalctl -xe; the output should suggest SELinux commands to run. The commands below are the ones I ran.

ausearch -c 'cmd.cgi' --raw | audit2allow -M my-cmdcgi

semodule -X 300 -i my-cmdcgi.pp

You may also encounter the error:

Could not open command file '/usr/local/nagios/var/rw/nagios.cmd'

Run the command below to fix it.

chcon -R -t httpd_sys_script_rw_t /usr/local/nagios/var/rw

You should now be able to manually reschedule Nagios host or service checks.

Once the check runs, you should get the email alert for the host being DOWN.

Restore the correct server IP and reschedule the check to run immediately. You should then receive the host UP alert by email.

That is all it takes to configure Nagios email notification using Gmail. You should now receive alerts for service and host state changes.